6.3. The Hierarchy of Evidence

By Marc Chao and Muhamad Alif Bin Ibrahim

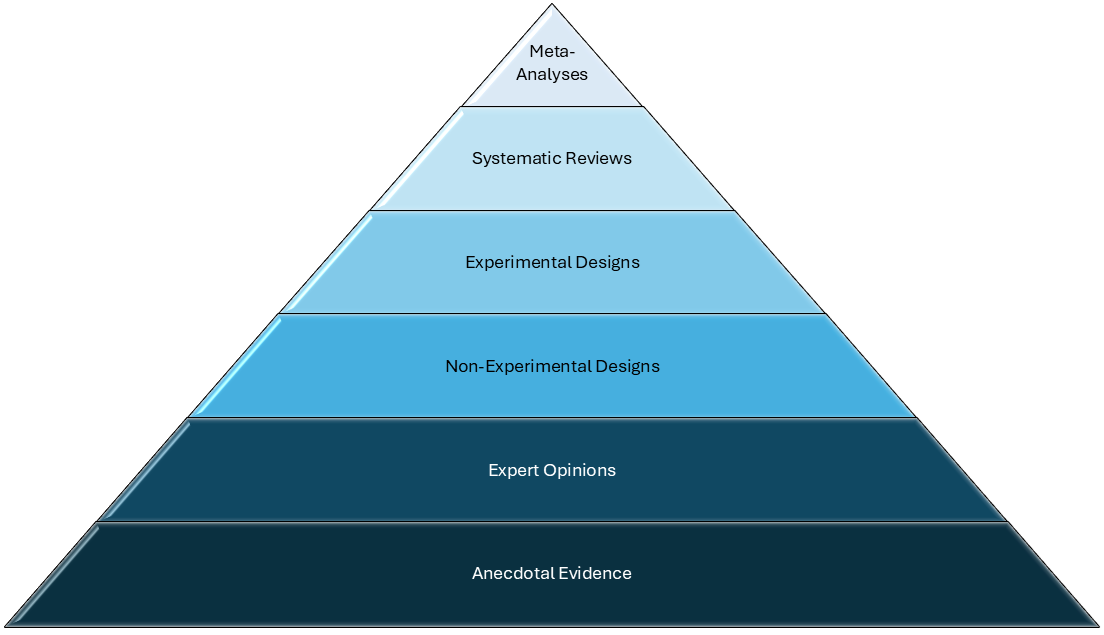

In scientific research, not all evidence carries the same weight. While every source of information may contribute something to our understanding of a topic, sources vary significantly in their reliability, validity, and overall usefulness. This variation is often depicted as a hierarchy of evidence (Figure 6.3.1). At its foundation lie anecdotal observations and personal experiences, while at its peak stand systematic reviews and meta-analyses, which synthesise large amounts of data to arrive at highly robust conclusions. Understanding this hierarchy is essential for anyone engaging with scientific research, as it helps prioritise the most credible and reliable sources when building arguments, making decisions, or advancing knowledge.

Anecdotal Evidence

At the base of the evidence pyramid is anecdotal evidence, which consists of personal stories, individual observations, and isolated experiences. These accounts are often emotionally compelling and memorable, but they are also inherently limited in scope and prone to biases. Anecdotes can highlight interesting phenomena and raise important questions, but they cannot provide reliable answers. For example, a person might claim that taking a particular supplement cured their anxiety, but this single observation does not account for other possible explanations, such as the placebo effect, natural recovery, or coincidental changes in their environment.

Anecdotal evidence is commonly found in everyday conversations, blog posts, social media updates, online forums, and video testimonials. Platforms like Facebook, Instagram, TikTok, and Reddit are rife with personal stories shared with the intent to convince, inspire, or simply share an experience. While these anecdotes can sometimes point toward trends or raise awareness about certain issues, they lack the systematic observation, controls, and peer review necessary to establish scientific credibility. Anecdotal evidence can serve as a spark for future research, offering initial insights or highlighting gaps in existing knowledge, but it should never be used as definitive proof of cause-and-effect relationships.

Expert Opinions

Slightly higher in the hierarchy are expert opinions, which are informed perspectives offered by individuals who possess extensive experience or specialised training in a given field. Experts often have deep knowledge of a subject, and their interpretations can provide valuable insights, especially when research is limited or emerging. However, expert opinions are still subject to bias, error, and individual limitations. They rely heavily on the expert’s perspective and may not always be backed by empirical evidence. For instance, a psychologist might propose a theory about memory consolidation based on years of clinical experience, but until that theory is tested empirically, it remains speculative. While expert opinions carry more weight than anecdotes, they still fall short of the rigour demanded by systematic scientific investigation.

Expert opinions are commonly found in narrative reviews, editorials, opinion pieces in academic journals, interviews with professionals in reputable publications, keynote speeches at conferences, and even podcasts or webinars featuring leading experts. In narrative reviews, experts synthesise existing knowledge on a topic and provide their interpretations, often drawing on their professional experience to highlight emerging trends or propose theoretical frameworks. While these sources are valuable for understanding current perspectives and identifying potential research directions, they should still be interpreted with caution, particularly when they lack supporting empirical data. Expert opinions serve as a useful guide, but they are most impactful when viewed as a starting point for further empirical investigation rather than definitive evidence.

Non-Experimental Designs

Moving further up the evidence ladder, we encounter non-experimental research designs, such as correlational and descriptive studies. These research designs aim to observe, measure, and classify relationships between variables without directly manipulating them. Correlational studies, for example, can reveal associations, like the observation that increased screen time is linked to poorer sleep quality, but they cannot establish causation. Descriptive studies, on the other hand, provide rich, detailed accounts of phenomena, such as case studies that explore the symptoms and behaviours of a single individual or group. While these designs are valuable for identifying patterns and generating hypotheses, they cannot definitively determine cause-and-effect relationships. They are best viewed as stepping stones toward more controlled experimental research.
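To make the gap between association and causation concrete, here is a minimal simulation sketch. It assumes a hypothetical third variable (labelled `stress`, which does not appear in the text above) that raises screen time and lowers sleep quality. In this toy world, screen time has no direct effect on sleep at all, yet the two still end up correlated. All names and numbers are illustrative assumptions, not data from any study.

```python
import random
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def simulate(n=2000, seed=1):
    """Simulate a toy world in which a hidden variable drives both measures."""
    rng = random.Random(seed)
    # Hypothetical confounder (an assumption for illustration only).
    stress = [rng.gauss(0, 1) for _ in range(n)]
    # Stress pushes screen time up and sleep quality down.
    # Screen time never appears in the formula for sleep quality.
    screen_time = [s + rng.gauss(0, 1) for s in stress]
    sleep_quality = [-s + rng.gauss(0, 1) for s in stress]
    return screen_time, sleep_quality


screen_time, sleep_quality = simulate()
r = pearson_r(screen_time, sleep_quality)
print(f"Correlation between screen time and sleep quality: {r:.2f}")
```

The printed correlation is clearly negative even though, by construction, changing screen time would do nothing to sleep. A correlational study observing only these two variables could not tell this situation apart from one in which screen time genuinely harms sleep.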

Experimental Designs

At a higher level of credibility are experimental designs, which are broadly divided into two categories: non-randomised experimental designs and randomised controlled trials (RCTs). Both involve the direct manipulation of one or more independent variables while carefully controlling for confounding factors, but they differ in their level of control and the strength of causal conclusions they can provide.

Non-Randomised Experimental Designs

Also known as quasi-experimental designs, these approaches involve manipulating an independent variable and observing its effects on a dependent variable, but they do not include random assignment of participants to experimental and control groups. Instead, participants might be assigned based on pre-existing groups, convenience, or other factors outside of strict randomisation. These designs are often used in educational, clinical, or field research where randomisation is impractical, unethical, or impossible. For example, a school-based study might test a new teaching method by assigning one classroom to the intervention group and another to the control group based on existing class structures or administrative decisions. While quasi-experimental designs offer valuable insights and can establish causal relationships to some degree, the absence of random assignment introduces a higher risk of bias and confounding variables. Researchers must employ additional statistical controls and methodological rigour to account for these limitations when interpreting their findings.

Randomised Controlled Trials (RCTs)

At the pinnacle of experimental designs are randomised controlled trials (RCTs), which are widely regarded as the gold standard for establishing causal relationships. In an RCT, participants are randomly assigned to either an experimental group, which receives an intervention, or a control group, which may receive a placebo or standard treatment. Because chance alone determines group membership, pre-existing differences between participants tend to balance out across the groups, so any observed differences in outcomes are likely due to the intervention rather than external factors. For instance, in a study testing a new anxiety-reduction therapy, participants might be randomly assigned to either receive the therapy or undergo a placebo treatment. If the therapy group shows significantly greater improvements in anxiety symptoms, researchers can attribute the effect to the intervention with much greater confidence than other designs allow. RCTs are especially valuable in medical and clinical psychology research, where precision and reliability are critical for informing evidence-based practices.
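Random assignment can be sketched in a few lines. The example below assumes 1,000 hypothetical participants with made-up baseline anxiety scores, shuffles them, and splits them into two equal groups. The function name `randomly_assign` and all values are illustrative assumptions, not part of any real trial protocol.

```python
import random
from statistics import mean


def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into two equal-sized groups."""
    rng = random.Random(seed)
    shuffled = participants[:]  # copy so the original list is left untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Hypothetical baseline anxiety scores (simulated, not real data).
score_rng = random.Random(42)
baseline_anxiety = [score_rng.gauss(50, 10) for _ in range(1000)]

therapy, placebo = randomly_assign(baseline_anxiety, seed=7)

print(f"Therapy group: n = {len(therapy)}, mean baseline = {mean(therapy):.1f}")
print(f"Placebo group: n = {len(placebo)}, mean baseline = {mean(placebo):.1f}")
```

Because chance alone decides who lands in which group, the two groups start with very similar average anxiety. The same balancing applies, on average, to characteristics the researchers never measured, which is what a quasi-experimental design based on pre-existing groups cannot guarantee.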

Systematic Reviews

Above experimental studies sit systematic reviews, which represent a synthesis of evidence from multiple studies addressing the same research question. Researchers conducting a systematic review follow a rigorous and transparent process to search for, evaluate, and summarise all available evidence on a particular topic. This approach minimises bias by including studies with varying results and methodologies, offering a more comprehensive and balanced view of the existing evidence. For example, a systematic review of research on the effectiveness of cognitive-behavioural therapy (CBT) for treating depression might include dozens of studies from different countries, populations, and clinical settings, ultimately painting a clearer picture of CBT’s overall effectiveness.

Meta-Analyses

One step higher in reliability is the meta-analysis, which goes beyond merely summarising studies by using statistical techniques to combine data from multiple studies into a single quantitative estimate of an effect. Meta-analyses not only pool results from individual studies but also weight them, typically by factors such as sample size and the precision of each study’s estimate, so that larger and more precise studies contribute more to the overall result. This approach allows researchers to identify patterns, measure effect sizes, and account for inconsistencies across studies. For example, a meta-analysis of studies on mindfulness-based stress reduction (MBSR) might reveal not only that MBSR is effective but also how its effects vary depending on factors such as participant age or duration of the intervention. Because of their statistical rigour and ability to aggregate large amounts of data, meta-analyses are considered one of the most robust forms of evidence available.
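The pooling step can be illustrated with a minimal sketch. It assumes one common weighting scheme, fixed-effect inverse-variance weighting, in which each study is weighted by one over its squared standard error. The text above does not specify a scheme, and real meta-analyses often use random-effects models instead. The three effect sizes and standard errors are hypothetical.

```python
from math import sqrt


def pooled_effect(effects, std_errors):
    """Fixed-effect inverse-variance pooled estimate and its standard error."""
    # More precise studies (smaller standard error) receive larger weights.
    weights = [1 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    pooled_se = sqrt(1 / total_weight)
    return pooled, pooled_se


# Hypothetical standardised effect sizes from three studies (not real data).
effects = [0.30, 0.50, 0.10]
std_errors = [0.10, 0.20, 0.05]

pooled, pooled_se = pooled_effect(effects, std_errors)
print(f"Pooled effect: {pooled:.3f} (standard error {pooled_se:.3f})")
```

The pooled estimate sits closest to the third study because its small standard error gives it the largest weight, and the pooled standard error is smaller than that of any single study. This is the sense in which combining studies yields a more precise estimate than any one study alone.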

At the pinnacle of the evidence hierarchy are Cochrane meta-analyses, which are produced by Cochrane (formerly the Cochrane Collaboration), a global network of researchers committed to producing high-quality, evidence-based reviews. Cochrane meta-analyses are held to exceptionally high standards of methodological transparency, reproducibility, and objectivity. Each review undergoes a meticulous process of study selection, quality assessment, and statistical analysis. These reviews are regularly updated to include the latest research, helping their conclusions remain current and accurate. For example, a Cochrane meta-analysis on the efficacy of antidepressants for treating major depressive disorder would provide one of the most authoritative summaries of the available evidence, making it a trusted resource for clinicians, policymakers, and researchers alike.

Incorporating Lived Experience into Scientific Research Syntheses

This section of the book has described the various sources of evidence for understanding the social world, and how credible and reliable each source is when building arguments, making decisions, or advancing knowledge. Although systematic reviews and meta-analyses sit at the top of the hierarchy of evidence in Figure 6.3.1, they have an important limitation. In these methods of research synthesis, identifying research questions, reviewing the extant literature (existing published research), generating interpretations, and identifying implications and recommendations for research and policy making are typically done without the involvement of individuals and communities with lived experience (Beames et al., 2021; Grindell et al., 2022). Incorporating the views and perspectives of target groups with lived experience (for example, people living with various health conditions, or those from marginalised communities) as part of the scientific research process, including research syntheses, can help ensure that the research design, data collection, and analysis are centred on their needs and priorities. This, in turn, makes it more likely that the findings and recommendations generated from scientific research benefit these target groups the most.

These co-creation, co-design, and co-production principles are integral to participatory research designs, where academics and researchers actively collaborate with patients, consumers, and other relevant stakeholders throughout the knowledge generation process (Grindell et al., 2022; Vargas et al., 2022). For example, academics and researchers can involve people with mental health issues in the research design process, collaborating with them to define the research questions that drive the study and to co-design its data collection tools (e.g., survey questions and interview guides). Including the voices and perspectives of those with lived experience in this way helps ground the research in real-world needs. Similarly, in research syntheses, individuals with mental health issues can be involved in the systematic review process by providing input on the review questions, as well as reflecting and commenting on the findings generated from the review (Beames et al., 2021). Such integrative review methods can provide additional insights into unexplored areas within mental health and can also lead to the timely development and implementation of contextually appropriate interventions that benefit other people with mental health issues.

References

Beames, J. R., Kikas, K., O’Gradey-Lee, M., Gale, N., Werner-Seidler, A., Boydell, K. M., & Hudson, J. L. (2021). A new normal: Integrating lived experience into scientific data syntheses. Frontiers in Psychiatry, 12, 1-4. https://doi.org/10.3389/fpsyt.2021.763005

Grindell, C., Coates, E., Croot, L., & O’Cathain, A. (2022). The use of co-production, co-design and co-creation to mobilise knowledge in the management of health conditions: A systematic review. BMC Health Services Research, 22(1), 1-26. https://doi.org/10.1186/s12913-022-08079-y

Vargas, C., Whelan, J., Brimblecombe, J., & Allender, S. (2022). Co-creation, co-design and co-production for public health: A perspective on definitions and distinctions. Public Health Research & Practice, 32(2), 1-7. https://doi.org/10.17061/phrp3222211